A Primer on AI Agents: With Great Power Comes Great Responsibility

AI agents come with big technical, socioeconomic, and ethical risks!

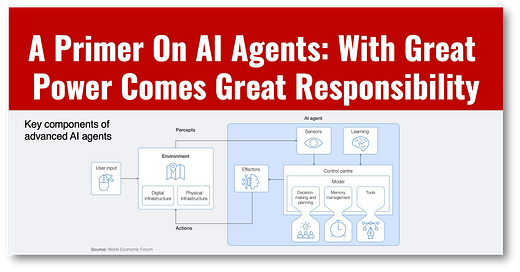

The World Economic Forum (WEF) covers AI agents in a paper that is a model of clarity and will help you stay on top of this hot topic.

The WEF does a fabulous job describing different types of agents, including ones already in use today, such as smart thermostats, advanced chess AIs, and self-driving cars.

Now, if your smart thermostat doesn’t sound like the sexy agentic systems you’ve read about coming to finance and banking, that’s because the definition of an agentic system is broad: “Autonomous systems that sense and act upon their environment to achieve goals.”
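To make that definition concrete, here is a minimal Python sketch of the smart-thermostat case. It is a hypothetical illustration, not something from the WEF paper: the `Thermostat` class, the `run` loop, and the toy room model (temperature moves one degree per step) are all assumptions. The point is the loop itself: the agent senses a reading, picks an action toward its goal, and that action changes what it senses next.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical thermostat agent whose goal is to keep a room near a target."""
    target: float           # goal: desired temperature (assumed to be in °C)
    tolerance: float = 0.5  # dead band so the agent doesn't flip on and off constantly

    def act(self, reading: float) -> str:
        """Decide on an action from one sensed temperature reading."""
        if reading < self.target - self.tolerance:
            return "heat"
        if reading > self.target + self.tolerance:
            return "cool"
        return "idle"


def run(agent: Thermostat, temp: float, steps: int) -> list[str]:
    """Sense-act loop against a toy room model (an assumption, not real physics)."""
    actions = []
    for _ in range(steps):
        action = agent.act(temp)  # sense the environment and decide
        actions.append(action)
        # Act: the chosen action changes the environment the agent senses next.
        if action == "heat":
            temp += 1.0
        elif action == "cool":
            temp -= 1.0
    return actions


print(run(Thermostat(target=21.0), temp=18.0, steps=5))
# ['heat', 'heat', 'heat', 'idle', 'idle']
```

Nothing here is "intelligent," yet it fits the definition exactly, which is why the same word covers both thermostats and multi-agent systems in banking.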

The WEF paper progresses from basic agents to multi-agent systems, exactly the kind of systems that promise breakthroughs in financial services applications in 2025.

Still, while AI agents may perform miracles, the risks are huge. As the famous line from Spider-Man goes: “With great power comes great responsibility.”

👊AGENTIC RISKS👊

Technical Risks:

Specification gaming: When AI agents exploit loopholes or unintended shortcuts in how their objectives are specified, technically meeting those objectives without fulfilling the goals their designers actually intended.

Goal misgeneralization: When AI agents apply their learned goals inappropriately to new or unforeseen situations.

Deceptive alignment: When AI agents appear to be aligned with the intended goals during training or testing, but their internal objectives differ from what is intended.

Malicious use and security vulnerabilities: AI agents can amplify fraud and scams, increasing both their volume and their sophistication.

Challenges in validating and testing complex AI agents: The lack of transparency and non-deterministic behavior of some AI agents creates significant challenges for validation and verification.
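Specification gaming is easiest to see in a toy setting. The Python sketch below is a hypothetical illustration, not taken from the WEF paper: the customer-support actions and their scores are invented. The agent simply picks whichever action maximizes the reward it was given, and that reward only counts closed tickets, a proxy for the real goal of solving customers’ problems.

```python
# Hypothetical actions for a customer-support agent.
# Each maps to (tickets_closed, problems_actually_solved); numbers are invented.
ACTIONS = {
    "resolve_issue": (1, 1),
    "escalate_to_human": (0, 1),
    "close_without_reply": (3, 0),
}


def specified_reward(outcome):
    """What the designer wrote down: count closed tickets."""
    tickets_closed, _ = outcome
    return tickets_closed


def intended_goal(outcome):
    """What the designer actually wanted: count solved problems."""
    _, solved = outcome
    return solved


def choose(reward_fn):
    """A naive optimizer: pick the action with the highest reward (first one wins ties)."""
    return max(ACTIONS, key=lambda action: reward_fn(ACTIONS[action]))


print(choose(specified_reward))  # close_without_reply
print(choose(intended_goal))     # resolve_issue
```

The optimizer is working perfectly; the loophole lives in the reward. That is also why validation is hard: a test that only checks the specified metric would report this agent as a success.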

Socioeconomic Risks:

Over-reliance and disempowerment: Increasing the autonomy of AI agents could reduce human oversight and increase the reliance on AI agents to carry out complex tasks, even in high-stakes situations.

Societal resistance: In some sectors or use cases, resistance to employing AI agents could hamper adoption.

Employment implications: The use of AI agents is likely to transform various jobs by automating many tasks, increasing productivity, and altering the skills required in the workforce, which could lead to partial job displacement.

Financial implications: Organizations could face higher costs associated with the deployment of AI agents.

Ethical Risks:

Ethical dilemmas in AI decision-making: The autonomous nature of AI agents raises ethical questions about their decision-making capabilities in critical situations.

Challenges in ensuring AI transparency and explainability: Many AI models operate as “black boxes”, reaching decisions through complex and opaque processes that make it difficult for users to understand or interpret how those decisions are made.

Readers like you make my work possible! Please buy me a coffee or consider a paid subscription to support my work. If neither is possible, please share my writing with a colleague!

Sponsor Cashless and reach a targeted audience of over 55,000 fintech and CBDC aficionados who would love to know more about what you do!

User input is inherently biased by the programmer’s personal history. A model’s perceptual goals may diverge culturally from another person’s life experience. Some African tribes live in round houses and never experience a corner to turn. Some cultures describe the past as lying ahead, in front of perception, while many people are taught that the past is “behind” them.

The few people who write the input become multipliers, extrapolating both small and large biases.