One-Third Of The C-Suite Prioritizes AI Innovation Over Responsibility

The stunning result shows that "responsible AI" is likely a myth.


It’s time to shatter your belief in responsible AI with one of the most consequential AI surveys I’ve ever seen: nearly 1/3 of the C-suite executives surveyed prioritized AI innovation over responsible conduct!

Shocked? Don’t be. For many in the C-suite, the siren call of innovation is irresistible. The result again shows that responsible AI is a myth: an AI gold rush mentality prioritizes results at any cost, even at the expense of ethics.

The survey, conducted among 2,300 GenAI decision-makers (70% at the C-level) in 34 countries, defined responsibility as “ethics, safety, sustainability, and inclusiveness.”

Perhaps we can find limited solace in the fact that an opposite-minded 1/3 prioritizes responsibility over innovation?

Maybe, but that leaves the final 1/3, who, in my view, tip the balance toward the negative by treating innovation and responsibility as equals.

Don't expect these executives to imbue AI with the ethics- or safety-first attitude that most consumers would demand.

This is a sad outcome for responsible AI, and it will affect all citizens, who are now the guinea pigs for AI innovation.

So, who can we trust and rely on for “ethical, safe, sustainable, and inclusive” AI?

I don’t know, but you are gravely mistaken if you think it will flow naturally from the C-suite’s sense of responsibility for humanity or its stewardship of their companies.

👉WHO WILL BE RESPONSIBLE FOR AI?

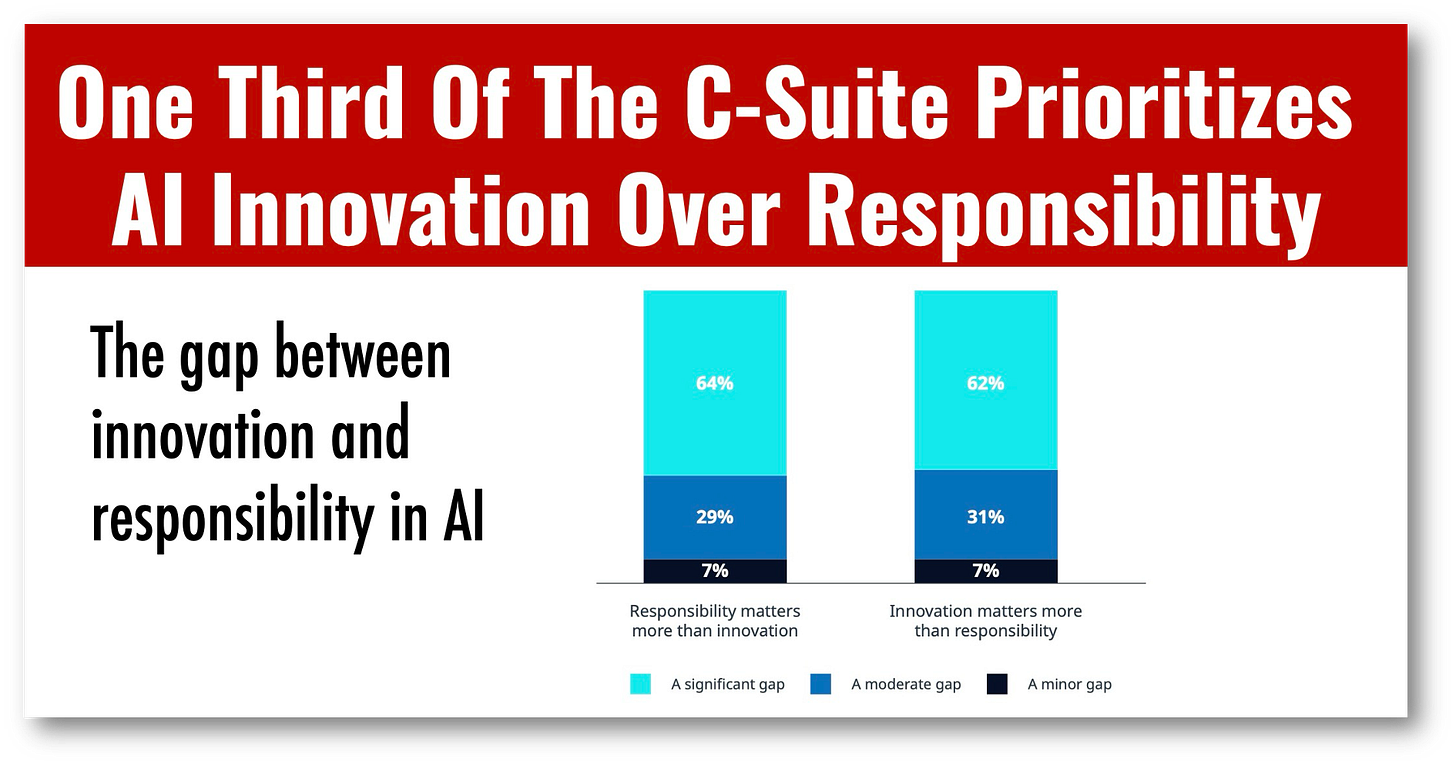

🔹 1 in 3 in the C-suite say responsibility matters more than innovation. Nearly 1 in 3 say innovation matters more, and the remainder say innovation and responsibility are equally important.

🔹 >60% of C-suite executives describe the gap between innovation and responsibility as significant.

🔹 >80% of organizations say leadership guidance on balancing GenAI innovation with ethical and moral responsibilities is very important, and the share who see this guidance as crucial rises as investment increases.

🔹 89% of the C-suite are very concerned about the potential security risks associated with GenAI deployments; just 24% of CISOs strongly agree that their organization follows a robust framework to balance risk and value creation.

🔹 43% of C-suite members who agree that innovation matters more than responsibility say government and industry guidelines and/or policies on responsibility are unclear.

🔹 >8 in 10 of all respondents say government regulations on GenAI are unclear, which stifles innovation and hinders investment. Most expect spending on GenAI-related regulatory compliance to increase.

Rich, thanks for bringing this information to light. However, calling responsible AI a "myth" is an exaggeration. The 1/3 of respondents who say responsibility matters more balances the 1/3 who fall on the side of innovation. (Nor does that mean the innovation group doesn't prioritize ethics at all; just that its focus on innovation outweighs it.) Plus, the middle 1/3 that holds innovation and ethics in tandem gives me hope that the balance can tip in a positive direction.

Sure, I'm biased. I admit that. It's why I preach responsible AI use in my weekly newsletter. The survey results put even more fire in my belly to continue. Again, thanks for shedding light on this issue.