Reality Check: GenAI in Banking, Will Banks Own the Errors?

Air Canada shows that customer chatbots pose real liabilities

Stop telling me how GenAI will transform banking; instead, tell me how banks will own the liability these new systems create!

This otherwise excellent BCG report and so many others miss this!

Need an example of chatbot liability?

Air Canada was recently ordered to give a partial refund to a grieving passenger after its chatbot inaccurately explained the airline’s bereavement policy.

The chatbot lied: “If you need to travel immediately or have already travelled and would like to submit your ticket for a reduced bereavement rate, kindly do so within 90 days of the date your ticket was issued by completing our Ticket Refund Application form.”

The airline’s actual policy does not allow bereavement refunds to be claimed after travel!

The $650 refund ordered by the tribunal may not sound earth-shattering, but you can be sure that banks contemplating customer service chatbots watched this ruling with interest.

Air Canada argued it should not be liable for its chatbot's answers because "the chatbot is a separate legal entity that is responsible for its own actions."

If that sounds terrifying, it is!

It shows just how far the airline was willing to go to avoid liability for its AI chatbot, and you can bet banks will be no better.

👉TAKEAWAYS

🔹 GenAI is a transformational technology for banks; this isn’t in doubt, but we need to see more of how banks will own the errors these machines will make.

🔹 Banks face real liabilities with customer-facing chatbots, and you can bet that, just like Air Canada, they will do everything they can to avoid paying out.

🔹 Until banks work out exactly who owns the liability for a chatbot gone rogue, you can bet that GenAI progress will be slower than advertised.

🔹 This is why most of the reports on GenAI transforming banks, like this one, fail: they sell AI hype, not the reality of AI liability.

🔹 This is not about being anti-GenAI but about being realistic: banks must sort out who owns the mistakes GenAI will inevitably make, just like Air Canada’s chatbot did.

🔹 Air Canada’s chatbot is now offline. How soon before a bank does the same?

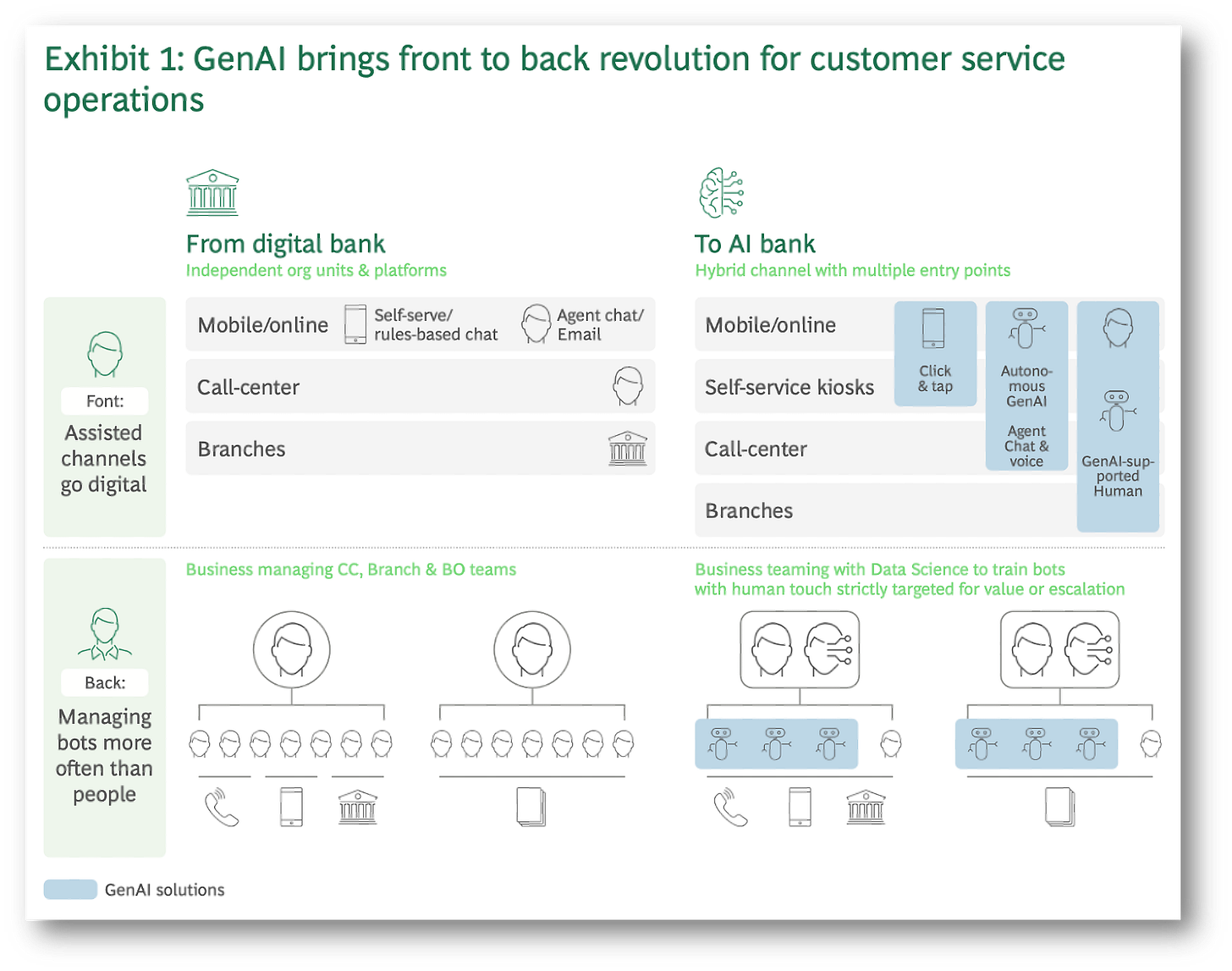

What is missing in this diagram is an analysis of the liabilities that banks will incur when their GenAI chatbots make a mistake!

GenAI is clearly a transformational technology, but the liabilities created by its use are not the same along the customer journey.

👊STRAIGHT TALK👊

Banks will face significant liabilities for GenAI chatbots, and they need to own those liabilities before they set the chatbots loose on customers.

Can you imagine a bank’s customer chatbot misquoting the bank’s rates or misrepresenting its products?

I can, and you can bet that banks, like Air Canada, will use crack legal teams and lengthy chatbot disclaimers to avoid responsibility.

While Air Canada showed how not to handle a chatbot mistake, I don’t think it's a stretch to think that banks, when confronted with much more expensive errors, will try similar strategies to avoid payment.

Instead of overhyping GenAI, BCG and other consultants should be coaching banks on managing the liabilities it creates.

Now, just in case you think GenAI chatbot errors are rare, look to ChatGPT, which just this week “spouted gibberish.”

Thoughts?

Hey, got anything to say about this article? I and others in our “Cashless” community would love to hear it!

Don’t pull your punches; tell me why I’m right or wrong!


A big thank you to all my subscribers! I appreciate your notes telling me how much you enjoy reading the Cashless newsletter.

Join them by subscribing! You’ll be glad you did!